Open Access

Agri-EBV-autumn

- Submitted by: Andrejs Zujevs

- Last updated: Tue, 09/07/2021 - 11:05

- DOI: 10.21227/524a-fv77

Abstract

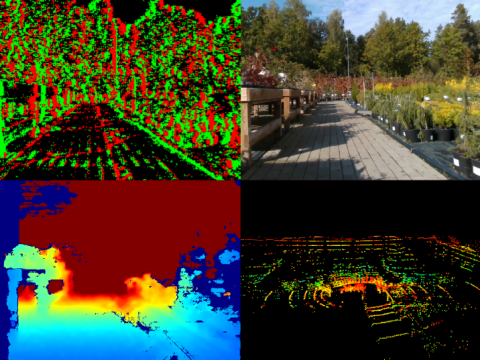

A new generation of computer vision, namely event-based or neuromorphic vision, provides a new paradigm for capturing visual data and for processing such data. Event-based vision is a state-of-the-art technology for robot vision. It is particularly promising for visual navigation tasks in both mobile robots and drones. Because the visual sensors used in event-based vision are highly novel, only a few datasets aimed at visual navigation tasks are publicly available. Such datasets provide an opportunity to evaluate visual odometry and visual SLAM methods by imitating data readout from real sensors. This dataset is intended to cover visual navigation tasks for mobile robots navigating in different types of agricultural environments. The dataset might open new opportunities for evaluating existing event-based visual navigation methods, and for creating new ones, for use in agricultural scenes that contain a lot of vegetation, animals, and patterned objects. The new dataset was created using our own custom-designed Sensor Bundle, which was installed on a mobile robot platform. During data acquisition sessions, the platform was manually controlled in environments such as forests, plantations, and a cattle farm. The Sensor Bundle consists of a dynamic vision sensor (DVS), a LIDAR, an RGB-D camera, and environmental sensors (temperature, humidity, and air pressure). The provided data sequences are accompanied by calibration data. The dynamic vision sensor, the LIDAR, and the environmental sensors were time-synchronized with a precision of 1 µs and time-aligned with an accuracy of ±1 ms. Ground truth was generated by Lidar-SLAM methods. In total, there are 21 data sequences in 12 different scenarios for the autumn season. Each data sequence is accompanied by a video demonstrating its content and a detailed description, including known issues. The reported common issues include relatively small missing fragments of data and problems with the RGB-D sensor's frame number sequence.

The new dataset is designed mainly for visual odometry tasks; however, it also includes loop closures for applying event-based visual SLAM methods.

A. Zujevs is supported by the European Regional Development Fund within the Activity 1.1.1.2 “Post-doctoral Research Aid” of the Specific Aid Objective 1.1.1 (No. 1.1.1.2/VIAA/2/18/334), while the others are supported by the Latvian Council of Science (lzp-2018/1-0482).

BibTeX citation:

@inproceedings{Zujevs2021,
  author = {Zujevs, Andrejs and Pudzs, Mihails and Osadcuks, Vitalijs and Ardavs, Arturs and Galauskis, Maris and Grundspenkis, Janis},
  booktitle = {IEEE International Conference on Robotics and Automation},
  title = {{A Neuromorphic Vision Dataset for Visual Navigation Tasks in Agriculture}},
  month = {June},
  year = {2021}
}

The dataset includes the following sequences:

- 01_forest - Closed loop, Forest trail, No wind, Daytime
- 02_forest - Closed loop, Forest trail, No wind, Daytime
- 03_green_meadow - Closed loop, Meadow, grass up to 30 cm, No wind, Daytime
- 04_green_meadow - Closed loop, Meadow, grass up to 30 cm, Mild wind, Daytime
- 05_road_asphalt - Closed loop, Asphalt road, No wind, Nighttime
- 06_plantation - Closed loop, Shrubland, Mild wind, Daytime
- 07_plantation - Closed loop, Asphalt road, No wind, Nighttime
- 08_plantation_water - Random movement, Sprinklers (water drops on camera lens), No wind, Nighttime
- 09_cattle_farm - Closed loop, Cattle farm, Mild wind, Daytime
- 10_cattle_farm - Closed loop, Cattle farm, Mild wind, Daytime
- 11_cattle_farm_feed_table - Closed loop, Cattle farm feed table, Mild wind, Daytime
- 12_cattle_farm_feed_table - Closed loop, Cattle farm feed table, Mild wind, Daytime
- 13_ditch - Closed loop, Sandy surface, Edge of ditch or drainage channel, No wind, Daytime
- 14_ditch - Closed loop, Sandy surface, Shore or bank, Strong wind, Daytime
- 15_young_pines - Closed loop, Sandy surface, Pine coppice, No wind, Daytime
- 16_winter_cereal_field - Closed loop, Winter wheat sowing, Mild wind, Daytime
- 17_winter_cereal_field - Closed loop, Winter wheat sowing, Mild wind, Daytime
- 18_winter_rapeseed_field - Closed loop, Winter rapeseed, Mild wind, Daytime
- 19_winter_rapeseed_field - Closed loop, Winter rapeseed, Mild wind, Daytime
- 20_field_with_a_cow - Closed loop, Cows tethered in pasture, Mild wind, Daytime
- 21_field_with_a_cow - Closed loop, Cows tethered in pasture, Mild wind, Daytime

Each sequence contains the following separately downloadable files:

- <..sequence_id..>_video.mp4 - provides an overview of the sequence data (for the DVS and RGB-D sensors).
- <..sequence_id..>_data.tar.gz - the entire data sequence in raw data format (AEDAT 2.0 for the DVS, images for the RGB-D camera, point clouds in pcd files for the LIDAR, and IMU csv files with original sensor timestamps). Timestamp conversion formulas are available.
- <..sequence_id..>_rawcalib_data.tar.gz - recorded fragments that can be used to perform the calibration independently (intrinsic, extrinsic, and time alignment).
- <..sequence_id..>_rosbags.tar.gz - the main sequence in ROS bag format. All sensor timestamps are aligned with the DVS with an accuracy of less than 1 ms.
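The naming scheme of the downloadable files can be sketched in a few lines of Python. This is only an illustration: the dictionary keys (`video`, `raw`, `rawcalib`, `rosbags`) and the function name `sequence_files` are labels invented for this sketch, while the suffixes are taken from the file names listed on this page.

```python
from typing import Dict

# File suffixes as they appear on this page; the keys are labels
# chosen for this sketch and are not part of the dataset.
FILE_SUFFIXES: Dict[str, str] = {
    "video": "_video.mp4",
    "raw": "_data.tar.gz",
    "rawcalib": "_rawcalib_data.tar.gz",
    "rosbags": "_rosbags.tar.gz",
}


def sequence_files(sequence_id: str) -> Dict[str, str]:
    """Return the four downloadable file names for one sequence id."""
    return {kind: sequence_id + suffix for kind, suffix in FILE_SUFFIXES.items()}


if __name__ == "__main__":
    for kind, name in sequence_files("01_forest").items():
        print(f"{kind:9s} {name}")
```

Such a helper might be handy when scripting downloads or checking that all four files of a sequence are present locally.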

The contents of each archive are described below.

Raw format data

The archive <..sequence_id..>_data.tar.gz contains the following files and folders:

- ./meta-data/ - all the useful information about the sequence
- ./meta-data/meta-data.md - detailed information about the sequence, sensors, files, and data formats
- ./meta-data/cad_model.pdf - sensor placement
- ./meta-data/<...>_timeconvs.json - coefficients for timestamp conversion formulas
- ./meta-data/ground-truth/ - movement ground-truth data, calculated using 3 different Lidar-SLAM algorithms (Cartographer, HDL-Graph, LeGo-LOAM)
- ./meta-data/calib-params/ - intrinsic and extrinsic calibration parameters
- ./recording/ - main sequence
- ./recording/dvs/ - DVS events and IMU data
- ./recording/lidar/ - Lidar point clouds and IMU data
- ./recording/realsense/ - Realsense camera RGB, Depth frames, and IMU data
- ./recording/sensorboard/ - environmental sensors data (temperature, humidity, air pressure)
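After extracting a raw data archive, the layout described above can be checked with a short script. The following is a minimal sketch, assuming the archive unpacks so that `meta-data/` and `recording/` sit directly under the chosen root directory; the function name `missing_entries` is hypothetical, and the `*_timeconvs.json` file is matched by suffix because its prefix is not spelled out here.

```python
from pathlib import Path
from typing import List

# Paths relative to the extraction root, as listed on this page.
EXPECTED_DIRS = [
    "meta-data",
    "meta-data/ground-truth",
    "meta-data/calib-params",
    "recording",
    "recording/dvs",
    "recording/lidar",
    "recording/realsense",
    "recording/sensorboard",
]
EXPECTED_FILES = ["meta-data/meta-data.md", "meta-data/cad_model.pdf"]


def missing_entries(root: Path) -> List[str]:
    """Return the expected folders and files that are absent under `root`."""
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    # The timestamp-conversion file has a sequence-specific prefix,
    # so it is matched by its suffix only.
    meta = root / "meta-data"
    if not meta.is_dir() or not any(meta.glob("*_timeconvs.json")):
        missing.append("meta-data/*_timeconvs.json")
    return missing


if __name__ == "__main__":
    # "01_forest_data" is a placeholder for wherever the archive was extracted.
    for entry in missing_entries(Path("01_forest_data")):
        print("missing:", entry)
```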

Calibration data

The <..sequence_id..>_rawcalib_data.tar.gz archive contains the following files and folders:

- ./imu_alignments/ - IMU recordings of the platform lifting before and after the main sequence (can be used for custom timestamp alignment)
- ./solenoids/ - IMU recordings of the solenoid vibrations before and after the main sequence (can be used for custom timestamp alignment)
- ./lidar_rs/ - Lidar vs Realsense camera extrinsic calibration by showing both sensors a spherical object (ball)
- ./dvs_rs/ - DVS and Realsense camera intrinsic and extrinsic calibration frames (checkerboard pattern)
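The IMU recordings are described as usable for custom timestamp alignment. One common way to do this, which is not necessarily the procedure used by the dataset authors, is to search for the sample shift that maximizes the correlation between two IMU signals that observed the same motion. The sketch below uses synthetic data and assumes both signals were already resampled to the same uniform rate; the function name `best_lag` and the 1 kHz rate are illustrative assumptions.

```python
from typing import Sequence


def best_lag(reference: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Return the shift k (in samples) maximising sum(reference[i] * other[i + k]).

    A positive result means the same event appears k samples later in `other`.
    Both signals are assumed to share one uniform sampling rate.
    """
    best_k, best_score = 0, float("-inf")
    for k in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(reference):
            j = i + k
            if 0 <= j < len(other):
                score += r * other[j]
        if score > best_score:
            best_k, best_score = k, score
    return best_k


if __name__ == "__main__":
    # Synthetic "lifting" bump that shows up 3 samples later in the second signal.
    ref = [0.0] * 10 + [1.0, 2.0, 1.0] + [0.0] * 10
    oth = [0.0] * 13 + [1.0, 2.0, 1.0] + [0.0] * 7
    lag = best_lag(ref, oth, max_lag=5)
    sample_period_s = 0.001  # assumed 1 kHz rate, for illustration only
    print("lag in samples:", lag, "offset in seconds:", lag * sample_period_s)
```

With real IMU data it usually helps to subtract the mean (for example, the gravity component) from each signal before correlating, so that a constant offset does not dominate the score.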

ROS Bag format data

There are six rosbag files for each scene; their contents are as follows:

- <..sequence_id..>_dvs.bag (topics: /dvs/camera_info, /dvs/events, /dvs/imu; respective message types: sensor_msgs/CameraInfo, dvs_msgs/EventArray, sensor_msgs/Imu).
- <..sequence_id..>_lidar.bag (topics: /lidar/imu/acc, /lidar/imu/gyro, /lidar/pointcloud; respective message types: sensor_msgs/Imu, sensor_msgs/Imu, sensor_msgs/PointCloud2).
- <..sequence_id..>_realsense.bag (topics: /realsense/camera_info, /realsense/depth, /realsense/imu/acc, /realsense/imu/gyro, /realsense/rgb, /tf; respective message types: sensor_msgs/CameraInfo, sensor_msgs/Image, sensor_msgs/Imu, sensor_msgs/Imu, sensor_msgs/Image, tf2_msgs/TFMessage).
- <..sequence_id..>_sensorboard.bag (topics: /sensorboard/air_pressure, /sensorboard/relative_humidity, /sensorboard/temperature; respective message types: sensor_msgs/FluidPressure, sensor_msgs/RelativeHumidity, sensor_msgs/Temperature).
- <..sequence_id..>_trajectories.bag (topics: /cartographer, /hdl, /lego_loam; respective message types: geometry_msgs/PoseStamped, geometry_msgs/PoseStamped, geometry_msgs/PoseStamped).
- <..sequence_id..>_data_for_realsense_lidar_calibration.bag (topics: /lidar/pointcloud, /realsense/camera_info, /realsense/depth, /realsense/rgb, /tf; respective message types: sensor_msgs/PointCloud2, sensor_msgs/CameraInfo, sensor_msgs/Image, sensor_msgs/Image, tf2_msgs/TFMessage).
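For scripting, the topic-to-message-type pairing of the six bag files can be written down as a lookup table. The sketch below transcribes the topics and types listed above into plain Python; the names `BAG_TOPICS`, `bags_with_topic`, and `bag_filename` are hypothetical helpers, and no ROS installation is needed to run it.

```python
from typing import Dict, List

# Bag suffix -> {topic: message type}, transcribed from the list above.
BAG_TOPICS: Dict[str, Dict[str, str]] = {
    "dvs": {
        "/dvs/camera_info": "sensor_msgs/CameraInfo",
        "/dvs/events": "dvs_msgs/EventArray",
        "/dvs/imu": "sensor_msgs/Imu",
    },
    "lidar": {
        "/lidar/imu/acc": "sensor_msgs/Imu",
        "/lidar/imu/gyro": "sensor_msgs/Imu",
        "/lidar/pointcloud": "sensor_msgs/PointCloud2",
    },
    "realsense": {
        "/realsense/camera_info": "sensor_msgs/CameraInfo",
        "/realsense/depth": "sensor_msgs/Image",
        "/realsense/imu/acc": "sensor_msgs/Imu",
        "/realsense/imu/gyro": "sensor_msgs/Imu",
        "/realsense/rgb": "sensor_msgs/Image",
        "/tf": "tf2_msgs/TFMessage",
    },
    "sensorboard": {
        "/sensorboard/air_pressure": "sensor_msgs/FluidPressure",
        "/sensorboard/relative_humidity": "sensor_msgs/RelativeHumidity",
        "/sensorboard/temperature": "sensor_msgs/Temperature",
    },
    "trajectories": {
        "/cartographer": "geometry_msgs/PoseStamped",
        "/hdl": "geometry_msgs/PoseStamped",
        "/lego_loam": "geometry_msgs/PoseStamped",
    },
    "data_for_realsense_lidar_calibration": {
        "/lidar/pointcloud": "sensor_msgs/PointCloud2",
        "/realsense/camera_info": "sensor_msgs/CameraInfo",
        "/realsense/depth": "sensor_msgs/Image",
        "/realsense/rgb": "sensor_msgs/Image",
        "/tf": "tf2_msgs/TFMessage",
    },
}


def bags_with_topic(topic: str) -> List[str]:
    """Return the bag suffixes whose topic list contains `topic`."""
    return [bag for bag, topics in BAG_TOPICS.items() if topic in topics]


def bag_filename(sequence_id: str, bag: str) -> str:
    """Build a bag file name following the <sequence_id>_<suffix>.bag pattern."""
    return f"{sequence_id}_{bag}.bag"


if __name__ == "__main__":
    for bag in bags_with_topic("/lidar/pointcloud"):
        print(bag_filename("01_forest", bag))
```

Note that some topics, such as /lidar/pointcloud and /tf, appear in more than one bag, which is why the lookup returns a list.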

Version history

22.06.2021.

- Realsense data now also contain depth png images with 16-bit depth, which are located in folder /recording/realsense/depth_native/

- Added data in rosbag format

Dataset Files

01_forest_video.mp4 (89.61 MB)

01_forest_data.tar.gz (6.70 GB)

01_forest_rawcalib_data.tar.gz (2.16 GB)

01_forest_rosbags.tar.gz (7.35 GB)

02_forest_video.mp4 (96.21 MB)

02_forest_data.tar.gz (6.93 GB)

02_forest_rawcalib_data.tar.gz (1.74 GB)

02_forest_rosbags.tar.gz (7.14 GB)

03_green_meadow_video.mp4 (52.97 MB)

03_green_meadow_data.tar.gz (3.00 GB)

03_green_meadow_rawcalib_data.tar.gz (1.91 GB)

03_green_meadow_rosbags.tar.gz (3.54 GB)

04_green_meadow_video.mp4 (53.43 MB)

04_green_meadow_data.tar.gz (3.09 GB)

04_green_meadow_rawcalib_data.tar.gz (2.11 GB)

04_green_meadow_rosbags.tar.gz (3.87 GB)

05_road_asphalt_video.mp4 (8.85 MB)

05_road_asphalt_data.tar.gz (2.02 GB)

05_road_asphalt_rawcalib_data.tar.gz (1.52 GB)

05_road_asphalt_rosbags.tar.gz (1.91 GB)

06_plantation_video.mp4 (48.18 MB)

06_plantation_data.tar.gz (4.81 GB)

06_plantation_rawcalib_data.tar.gz (2.35 GB)

06_plantation_rosbags.tar.gz (5.65 GB)

07_plantation_video.mp4 (49.24 MB)

07_plantation_data.tar.gz (4.85 GB)

07_plantation_rawcalib_data.tar.gz (2.30 GB)

07_plantation_rosbags.tar.gz (5.65 GB)

08_plantation_water_video.mp4 (85.47 MB)

08_plantation_water_data.tar.gz (9.00 GB)

08_plantation_water_rawcalib_data.tar.gz (2.44 GB)

08_plantation_water_rosbags.tar.gz (9.57 GB)

09_cattle_farm_video.mp4 (36.01 MB)

09_cattle_farm_data.tar.gz (3.61 GB)

09_cattle_farm_rawcalib_data.tar.gz (1.27 GB)

09_cattle_farm_rosbags.tar.gz (3.57 GB)

10_cattle_farm_video.mp4 (36.97 MB)

10_cattle_farm_data.tar.gz (3.55 GB)

10_cattle_farm_rawcalib_data.tar.gz (1.22 GB)

10_cattle_farm_rosbags.tar.gz (3.49 GB)

11_cattle_farm_feed_table_video.mp4 (19.74 MB)

11_cattle_farm_feed_table_data.tar.gz (2.75 GB)

11_cattle_farm_feed_table_rawcalib_data.tar.gz (1.10 GB)

11_cattle_farm_feed_table_rosbags.tar.gz (2.73 GB)

12_cattle_farm_feed_table_video.mp4 (19.61 MB)

12_cattle_farm_feed_table_data.tar.gz (2.60 GB)

12_cattle_farm_feed_table_rawcalib_data.tar.gz (1.16 GB)

12_cattle_farm_feed_table_rosbags.tar.gz (2.69 GB)

13_ditch_video.mp4 (19.18 MB)

13_ditch_data.tar.gz (1.79 GB)

13_ditch_rawcalib_data.tar.gz (1.59 GB)

13_ditch_rosbags.tar.gz (2.27 GB)

14_ditch_video.mp4 (80.89 MB)

14_ditch_data.tar.gz (4.24 GB)

14_ditch_rawcalib_data.tar.gz (1.21 GB)

14_ditch_rosbags.tar.gz (4.28 GB)

15_young_pines_video.mp4 (38.60 MB)

15_young_pines_data.tar.gz (3.24 GB)

15_young_pines_rawcalib_data.tar.gz (1.22 GB)

15_young_pines_rosbags.tar.gz (3.34 GB)

16_winter_cereal_field_video.mp4 (38.22 MB)

16_winter_cereal_field_data.tar.gz (2.84 GB)

16_winter_cereal_field_rawcalib_data.tar.gz (1.21 GB)

16_winter_cereal_field_rosbags.tar.gz (2.91 GB)

17_winter_cereal_field_video.mp4 (32.77 MB)

17_winter_cereal_field_data.tar.gz (2.60 GB)

17_winter_cereal_field_rawcalib_data.tar.gz (1.16 GB)

17_winter_cereal_field_rosbags.tar.gz (2.64 GB)

18_winter_rapeseed_field_video.mp4 (52.88 MB)

18_winter_rapeseed_field_data.tar.gz (3.13 GB)

18_winter_rapeseed_field_rawcalib_data.tar.gz (1.10 GB)

18_winter_rapeseed_field_rosbags.tar.gz (3.04 GB)

19_winter_rapeseed_field_video.mp4 (49.73 MB)

19_winter_rapeseed_field_data.tar.gz (2.79 GB)

19_winter_rapeseed_field_rawcalib_data.tar.gz (1,023.67 MB)

19_winter_rapeseed_field_rosbags.tar.gz (2.62 GB)

20_field_with_a_cow_video.mp4 (90.37 MB)

20_field_with_a_cow_data.tar.gz (4.94 GB)

20_field_with_a_cow_rawcalib_data.tar.gz (1.25 GB)

20_field_with_a_cow_rosbags.tar.gz (4.67 GB)

21_field_with_a_cow_video.mp4 (120.80 MB)

21_field_with_a_cow_data.tar.gz (5.13 GB)

21_field_with_a_cow_rawcalib_data.tar.gz (1.31 GB)

21_field_with_a_cow_rosbags.tar.gz (5.12 GB)
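Because the archives are large, it can be useful to estimate the download size of a selection before fetching it. The following sketch parses entries in the `name (size unit)` form used in the list above. Treating MB and GB as binary units (1 GB = 1024 MB) is an assumption of this sketch, and the function names are hypothetical.

```python
import re
from typing import Iterable, Tuple

# Assumption: sizes are reported in binary units (1 GB = 1024 MB).
_UNIT_BYTES = {"MB": 1024 ** 2, "GB": 1024 ** 3}
_ENTRY = re.compile(r"^(?P<name>\S+) \((?P<value>[\d,.]+) (?P<unit>MB|GB)\)$")


def parse_entry(line: str) -> Tuple[str, int]:
    """Parse 'file_name (1,023.67 MB)' into (file_name, approximate size in bytes)."""
    match = _ENTRY.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognised entry: {line!r}")
    value = float(match.group("value").replace(",", ""))
    return match.group("name"), int(round(value * _UNIT_BYTES[match.group("unit")]))


def total_bytes(lines: Iterable[str]) -> int:
    """Sum the approximate sizes of all non-empty entries."""
    return sum(parse_entry(line)[1] for line in lines if line.strip())


if __name__ == "__main__":
    # The four files of the first sequence, copied from the list above.
    entries = [
        "01_forest_video.mp4 (89.61 MB)",
        "01_forest_data.tar.gz (6.70 GB)",
        "01_forest_rawcalib_data.tar.gz (2.16 GB)",
        "01_forest_rosbags.tar.gz (7.35 GB)",
    ]
    print(f"approx. {total_bytes(entries) / 1024 ** 3:.2f} GB for sequence 01_forest")
```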
