human activity recognition

Our dataset covers 8 actions, 7 people (P1-P7) and 3 experimental environments (Room-A, Room-B, Room-C). Each environment has 3 directions, and 5 samples of each action were recorded for each person in each direction. This gives 8 × 3 × 3 × 5 = 360 samples per person and 360 × 7 = 2520 samples in total.
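
The sample count above can be checked by enumerating the recording grid. This is a minimal sketch: the person IDs and room names come from the description, but the action and direction names are hypothetical placeholders, because the dataset does not name them here.

```python
from itertools import product

# P1-P7 and Room-A..Room-C come from the dataset description.
# The action and direction names below are hypothetical placeholders.
PEOPLE = [f"P{i}" for i in range(1, 8)]
ROOMS = ["Room-A", "Room-B", "Room-C"]
DIRECTIONS = [f"dir{i}" for i in range(1, 4)]
ACTIONS = [f"action{i}" for i in range(1, 9)]
REPEATS = range(1, 6)  # 5 samples per (person, room, direction, action)

# One key per recorded sample: (person, room, direction, action, repeat).
samples = list(product(PEOPLE, ROOMS, DIRECTIONS, ACTIONS, REPEATS))

per_person = sum(1 for key in samples if key[0] == "P1")
print(per_person, len(samples))
```

Keys of this form can also serve as a simple index for splits, for example holding out one room or one person for cross-environment or cross-subject evaluation.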

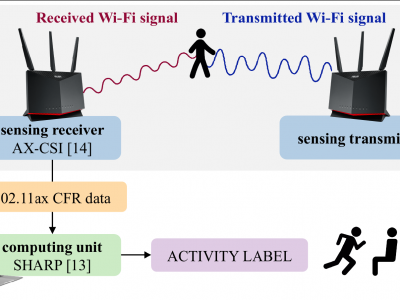

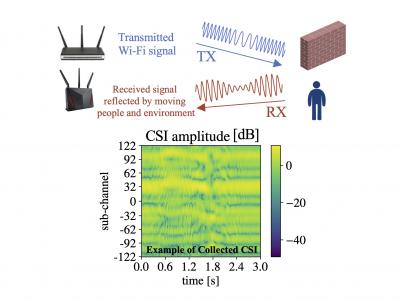

The dataset includes channel frequency response (CFR) data collected with an IEEE 802.11ax device for human activity recognition. It is the first dataset for Wi-Fi sensing based on IEEE 802.11ax, the most recent Wi-Fi version available in commercial devices. The data were collected in a single environment with a single person, since the purpose of the study was to evaluate the impact of communication parameters on the performance of sensing algorithms.
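
CFR values are complex numbers, one per subcarrier for each captured snapshot. The sketch below shows one simple way to turn them into features. It assumes, hypothetically, that the data have already been loaded as a list of snapshots, each a list of complex values; the file format is not described here. The helper names are illustrative. Per-subcarrier amplitude variability over time is used only as an example motion indicator, not as the method of the associated study.

```python
import cmath
import statistics

def amplitude_and_phase(snapshot):
    """Split one CFR snapshot (one complex value per subcarrier) into |H| and phase(H)."""
    amplitudes = [abs(h) for h in snapshot]
    phases = [cmath.phase(h) for h in snapshot]  # radians in (-pi, pi]
    return amplitudes, phases

def amplitude_variability(frames):
    """Population standard deviation of |H| over time, computed per subcarrier.

    frames: list of snapshots, each a list of complex CFR values of equal length.
    """
    n_subcarriers = len(frames[0])
    return [
        statistics.pstdev([abs(frame[k]) for frame in frames])
        for k in range(n_subcarriers)
    ]

# Toy input: 2 snapshots x 2 subcarriers (not real 802.11ax data).
frames = [[1 + 0j, 0 + 1j], [1 + 0j, 0 + 2j]]
amps, phases = amplitude_and_phase(frames[0])
variability = amplitude_variability(frames)
print(amps, variability)
```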

The complete description of the dataset can be found at: https://arxiv.org/abs/2305.03170

FallAllD is a large open dataset of human falls and activities of daily living simulated by 15 participants. FallAllD consists of 26,420 files collected using three data-loggers worn on the waist, wrist and neck of the subjects. Motion signals are captured with an accelerometer, gyroscope, magnetometer and barometer, using efficient configurations suited to potential applications such as fall detection, fall prevention and human activity recognition.
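
A common first step in accelerometer-based fall detection is to compute the acceleration magnitude and flag samples above a threshold as impact candidates. The sketch below illustrates that generic technique. It is not FallAllD's own method. The 2.5 g threshold, the unit (g) and the toy trace are illustrative assumptions, and FallAllD's raw units and sampling rates should be checked in its documentation before any threshold is chosen.

```python
import math

def magnitude(ax, ay, az):
    """Euclidean norm of one tri-axial accelerometer sample."""
    return math.sqrt(ax * ax + ay * ay + az * az)

def impact_candidates(samples, threshold):
    """Indices of samples whose acceleration magnitude exceeds `threshold`.

    `samples` is a sequence of (ax, ay, az) tuples, all in the same unit as `threshold`.
    """
    return [i for i, (ax, ay, az) in enumerate(samples) if magnitude(ax, ay, az) > threshold]

# Toy trace in g (not FallAllD data): rest, rest, a spike, rest.
trace = [(0.0, 0.0, 1.0), (0.0, 0.1, 1.0), (2.0, 2.0, 2.0), (0.0, 0.0, 1.0)]
hits = impact_candidates(trace, threshold=2.5)  # 2.5 g is an illustrative value only
print(hits)
```

A single threshold also fires on vigorous non-fall movements. Practical detectors usually add further cues such as post-impact inactivity, or use a learned classifier.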

PRECIS HAR is an RGB-D dataset for human activity recognition, captured with the Orbbec Astra Pro 3D camera. It consists of 16 different activities (stand up, sit down, sit still, read, write, cheer up, walk, throw paper, drink from a bottle, drink from a mug, move hands in front of the body, move hands close to the body, raise one hand up, raise one leg up, fall from bed, and faint), performed by 50 subjects.
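
For classification, the 16 activity names need to be mapped to integer class IDs. The sketch below builds that mapping from the list above. The ordering, and therefore the specific IDs, is an assumption that simply follows the order of the description. It is not necessarily the encoding used in the dataset files.

```python
# Activity names as listed in the PRECIS HAR description; the integer IDs
# assigned below follow this order and are an assumption, not an official encoding.
PRECIS_ACTIVITIES = [
    "stand up", "sit down", "sit still", "read", "write", "cheer up", "walk",
    "throw paper", "drink from a bottle", "drink from a mug",
    "move hands in front of the body", "move hands close to the body",
    "raise one hand up", "raise one leg up", "fall from bed", "faint",
]

LABEL_TO_ID = {name: i for i, name in enumerate(PRECIS_ACTIVITIES)}
ID_TO_LABEL = dict(enumerate(PRECIS_ACTIVITIES))

print(len(PRECIS_ACTIVITIES), LABEL_TO_ID["walk"], ID_TO_LABEL[15])
```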

Multi-modal Exercises Dataset (MEx) is a multi-sensor, multi-modal dataset designed to benchmark Human Activity Recognition (HAR) and multi-modal fusion algorithms. Its collection was motivated by the need to recognise exercises and evaluate the quality of exercise performance in support of patients with Musculoskeletal Disorders (MSD). The MEx dataset contains data from 25 people recorded with four sensors: two accelerometers, a pressure mat and a depth camera.
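
One simple baseline for multi-modal fusion is late fusion, in which a classifier is trained per sensor and their class-probability outputs are combined. The sketch below averages those outputs with optional weights. The modality names and probability values are hypothetical, and late averaging is only one of several fusion strategies (early or feature-level fusion are alternatives). It is not presented as the MEx benchmark protocol.

```python
def late_fusion(per_modality_probs, weights=None):
    """Weighted average of per-modality class-probability vectors.

    per_modality_probs: dict mapping modality name -> list of class probabilities
    (all lists must have the same length). Returns (fused_probs, predicted_class).
    """
    names = list(per_modality_probs)
    if weights is None:
        weights = {name: 1.0 for name in names}  # equal weighting by default
    total = sum(weights[name] for name in names)
    n_classes = len(per_modality_probs[names[0]])
    fused = [
        sum(weights[name] * per_modality_probs[name][c] for name in names) / total
        for c in range(n_classes)
    ]
    predicted = max(range(n_classes), key=lambda c: fused[c])
    return fused, predicted

# Hypothetical two-class outputs from four per-sensor classifiers.
probs = {
    "accelerometer_1": [0.6, 0.4],
    "accelerometer_2": [0.6, 0.4],
    "pressure_mat": [0.2, 0.8],
    "depth_camera": [0.2, 0.8],
}
fused, best = late_fusion(probs)
print(fused, best)
```

Passing a `weights` dict lets more reliable sensors count for more. The weights here would have to be tuned on validation data.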

Sanitation is a new dataset released to evaluate the performance of HAR algorithms and to benefit researchers in this field. It contains data on seven types of daily work activity performed by sanitation workers. We provide two .csv files: the raw dataset “sanitation.csv” and a pre-processed feature dataset suitable for machine-learning-based human activity recognition methods.
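
A quick sanity check after downloading a CSV like this is to count rows per activity class. The sketch below uses Python's standard `csv` module. The column name `label`, the feature columns and the activity names in the toy input are hypothetical, because the column layout of “sanitation.csv” is not given in this description.

```python
import csv
import io
from collections import Counter

def class_counts(f, label_column="label"):
    """Count rows per activity label in a CSV file object with a header row.

    `label_column` is a hypothetical default; set it to the real column name.
    """
    return Counter(row[label_column] for row in csv.DictReader(f))

# Toy stand-in for sanitation.csv; the real column names are not documented here.
demo = io.StringIO("acc_x,acc_y,label\n0.1,0.2,sweeping\n0.3,0.1,sweeping\n0.0,0.5,walking\n")
counts = class_counts(demo)
print(counts)
```

On the real file, open it with `open("sanitation.csv", newline="")` and pass the actual label column name. With seven activity types, the resulting `Counter` should have seven keys.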

This is a highly versatile, precisely annotated, large-scale dataset of smartphone sensor data for multimodal locomotion and transportation analytics of mobile users.

The dataset comprises 7 months of measurements, collected from all sensors of 4 smartphones carried at typical body locations, including the images of a body-worn camera, while 3 participants used 8 different modes of transportation in the southeast of the United Kingdom, including in London.

Recognition of human activities is one of the most promising research areas in artificial intelligence. Its growth has accompanied advances in sensing technologies and strong demand for mobile, context-aware, real-time applications. We used a smartwatch (Apple Watch) to collect sensor data for 14 activities of daily living (ADLs).
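
Continuous smartwatch recordings are typically cut into fixed-length, possibly overlapping windows before feature extraction or classification. The sketch below shows that common segmentation step, labelling each window with its most frequent per-sample label. The description does not state how these recordings were segmented. The window and step sizes are illustrative, and per-sample labels are an assumption about how annotations would be stored.

```python
from collections import Counter

def sliding_windows(samples, labels, window, step):
    """Cut a sensor stream into fixed-length windows.

    samples: sequence of sensor readings; labels: per-sample activity labels (same length).
    Returns a list of (window_samples, majority_label) pairs; a trailing partial window is dropped.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    out = []
    for start in range(0, len(samples) - window + 1, step):
        # Most frequent label in the window (ties go to the label seen first).
        majority = Counter(labels[start:start + window]).most_common(1)[0][0]
        out.append((samples[start:start + window], majority))
    return out

# Toy stream of 10 readings: 5 labelled "walk", then 5 labelled "sit".
stream = list(range(10))
stream_labels = ["walk"] * 5 + ["sit"] * 5
windows = sliding_windows(stream, stream_labels, window=4, step=2)
print([label for _, label in windows])
```

A step smaller than the window gives overlapping windows (50% overlap here). Windows from the same recording should not be split across training and test sets, because that would leak data between them.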
